You’re preparing a dinner party, the kind where everything has to go right. You’ve done this plenty of times before, but this time, it’s different. You need to impress. The guests matter, and so does the outcome.

You’ve planned the evening from start to finish. You know when the roast goes in, which wine to open first, and how many extra plates to set, just in case. The whole thing runs like clockwork because you’ve done it enough times to know what to expect.

Now imagine someone tells you that you’ll be getting a little help tonight: help in the form of a monkey. You’re told it’s been trained, more or less, and that it might actually make things more efficient. But this monkey doesn’t follow instructions the way you do. It’s curious. It’s fast. It’s unpredictable. It may eat the ingredients, rearrange the cutlery, knock over a bottle of wine, or open the back door and let in a feral cat. And now it’s in your kitchen.

That’s what it feels like when organizations start deploying AI agents into live operations.

You’ve heard the promises: automation, speed, increased productivity, intelligent action. But what isn’t being said nearly often enough is how unpredictable, fragile, and deeply complex this shift can be, especially when those agents don’t work alone.

Before we go any further, let’s unpack how we got here. Because the only way to govern agentic systems is by designing governance into the systems themselves—using agents of their own.

From Models to Mayhem

To understand what makes this shift so important, it helps to look at how AI capabilities have evolved—especially through the lens of how businesses actually use them.

Predictive AI (Machine Learning)

We began with models designed for a single task in a single context. They made predictions, and a human decided what to do with the result. These systems have been in production for nearly two decades.

They were limited but useful, and they always required a person to take action.

A recommendation engine on Netflix suggests the next show to watch based on your viewing history

A bank scores your likelihood of repaying a loan, flagging risky applications for a human underwriter

An e-commerce site predicts future demand to optimize inventory levels in regional warehouses

Generative AI

The next layer came with the ability to produce new content—language, images, and code—on demand. These models are newer and are just being deployed as enterprise pilots.

They feel more human, but they still operate without context, can reason only about simple problems, and have no real understanding of consequences.

A legal team uses an LLM to summarize 200-page contracts into 10 bullet points

A customer service chatbot writes empathetic replies in 40 languages

A brand team generates multiple variants of social ad copy for A/B testing

A product manager asks for new UI wireframes based on a feature description and gets a first draft instantly

Simple Agents

By wrapping LLMs with memory, rules, and access to tools, we get systems that are able to take action. These are still relatively new in business contexts, with deployments limited to applications such as customer service or marketing demand generation.

They handle basic decisions, sequence tasks, and execute commands in bounded domains.

A finance agent checks if you have enough funds to pay your electricity bill, and if not, pulls from savings and sends a confirmation

A sales assistant logs call notes into the CRM, drafts a follow-up, and schedules a next step

A marketing operations agent monitors campaign performance and reallocates ad budget when spend efficiency drops

An internal helpdesk agent resets passwords, opens tickets, and loops in IT when requests fall outside its scope
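To make the bill-paying example concrete, here is a minimal sketch of the decision logic such an agent might wrap around its tools. The `Accounts` class and `pay_bill` function are hypothetical stand-ins for a real banking API, and the amounts are invented; a production system would not use floats for money.

```python
from dataclasses import dataclass, field


@dataclass
class Accounts:
    """Hypothetical in-memory stand-in for a bank API."""
    checking: float
    savings: float
    log: list[str] = field(default_factory=list)


def pay_bill(accounts: Accounts, bill: float) -> str:
    """Pay a bill from checking, topping up from savings when needed."""
    shortfall = bill - accounts.checking
    if shortfall > 0:
        if shortfall > accounts.savings:
            # Outside the agent's bounded domain: hand off to a human.
            accounts.log.append("escalate: insufficient funds")
            return "escalated"
        accounts.savings -= shortfall
        accounts.checking += shortfall
        accounts.log.append(f"transfer {shortfall:.2f} from savings")
    accounts.checking -= bill
    accounts.log.append(f"paid {bill:.2f}")
    return "confirmed"


acct = Accounts(checking=40.0, savings=200.0)
status = pay_bill(acct, bill=100.0)
print(status, acct.checking, acct.savings)  # confirmed 0.0 140.0
```

The point of the sketch is the bounded domain: the agent acts on its own inside the rules it was given and escalates the moment a request falls outside them.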

Multiple Agents

As simple agents mature, a few organizations are designing new business processes for agents—each assigned to different tasks, with their own logic and tools.

These systems are still in sandbox environments for most companies, but the technical foundation now exists.

One agent monitors stock levels and reorders inventory

Another adjusts product pricing based on demand and margin

A third negotiates with suppliers for better payment terms

A fourth runs delivery routing and exception handling

A fifth responds to customer feedback by adjusting recommendations

Agentic Systems

The next step is what we’re just beginning to explore: systems made up of interconnected agents that reason, adapt, and coordinate—sometimes with other organizations’ agents, sometimes outside the firewall.

These are largely experimental today, but technically possible.

A virtual CFO reallocates funds, hedges currency risk, and initiates microloans on your behalf

A set of portfolio agents monitors investments, rebalances allocations, and reports gains or losses back to a personal finance agent

A multi-agent claim system in insurance routes, validates, flags fraud, and coordinates customer communication, all in real time

A travel agent network handles loyalty points, flight inventory, cancellation rules, and rebooking logic across suppliers using agent-to-agent protocols

These aren’t controlled by traditional workflows. They adapt to context, pursue goals, and sometimes produce outcomes no one explicitly instructed them to deliver.

And that’s what makes them both promising and precarious.

Training the Monkey

Back in the kitchen, maybe you’ve spent weeks preparing. You’ve taught the monkey not to throw food or knock things over. You’ve built guardrails. You’ve tested it in low-risk environments. The first few tasks go fine.

Then someone suggests adding a dog to manage deliveries. And a squirrel to handle snacks. Now you’re not just training individual animals. You’re coordinating unpredictable, semi-autonomous actors that move at speed, operate on instinct, and don’t always agree.

This is what happens when you go from a single AI agent to an agentic system. The complexity doesn’t grow linearly. It multiplies.
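One simple way to see why, offered here as an illustration rather than a formal model, is to count the pairs of agents that could affect one another. The helper name `pairwise_channels` is made up for this sketch.

```python
from math import comb


def pairwise_channels(n_agents: int) -> int:
    """Number of distinct agent pairs that could interact: n choose 2."""
    return comb(n_agents, 2)


for n in (1, 3, 5, 10):
    print(n, "agents ->", pairwise_channels(n), "possible pairings")
# 1 agents -> 0 possible pairings
# 3 agents -> 3 possible pairings
# 5 agents -> 10 possible pairings
# 10 agents -> 45 possible pairings
```

Ten agents is ten things to train but forty-five relationships to watch, and that is before counting interactions that involve three or more agents at once.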

Monkey, Dog, and Squirrel

Each agent has its own logic. Its own tools. Its own goals. In isolation, they might all perform well. But taken together, the interactions matter more than the individual performances.

Imagine this: one agent speeds up customer fulfilment. Another adjusts inventory reordering thresholds. A third continues discounting products based on outdated cost assumptions. The end result? You’re selling more units, at a loss, and no one notices until it shows up in the quarterly financials.

These kinds of feedback loops and blind spots are already emerging in live deployments. Complex agentic systems don’t fail outright; rather, they succeed at the wrong things.
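The discounting scenario can be sketched in a few lines of arithmetic. Every number below is invented for illustration, and `unit_margin` and `margin_alert` are hypothetical helpers, not part of any real pricing system.

```python
def unit_margin(list_price: int, discount_pct: int, unit_cost: int) -> int:
    """Margin per unit, in whole currency units, after a percentage discount."""
    selling_price = list_price * (100 - discount_pct) // 100
    return selling_price - unit_cost


# All figures are assumptions made up for this example.
LIST_PRICE = 100
DISCOUNT_PCT = 20
ASSUMED_COST = 70   # the cost the discounting agent still believes
ACTUAL_COST = 85    # the real cost after the other agents changed fulfilment and reordering
UNITS_SOLD = 1_000

believed_profit = unit_margin(LIST_PRICE, DISCOUNT_PCT, ASSUMED_COST) * UNITS_SOLD
actual_profit = unit_margin(LIST_PRICE, DISCOUNT_PCT, ACTUAL_COST) * UNITS_SOLD


def margin_alert(believed: int, actual: int) -> bool:
    """Flag the case where the agent thinks it is profitable but is not."""
    return believed > 0 and actual < 0


print(believed_profit, actual_profit, margin_alert(believed_profit, actual_profit))
# 10000 -5000 True
```

Each agent’s local numbers look healthy. Only a check that compares the discounting agent’s assumptions with the costs the other agents have actually produced reveals the loss.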

Agentic Systems Are Economies

Traditional software automates tasks. Agentic systems make tradeoffs. They pursue outcomes. They reason about alternatives and negotiate with peers. They operate with memory and incentives. And they never stop.

You don’t run these systems like software anymore. You run them like markets.

There are actors, transactions, negotiations, signals, and distortions. But no single view of what’s happening. No central logic that explains why one outcome occurred instead of another.

In a world like this, rulesets alone do not provide adequate control. Control comes from building systems that can detect when something’s gone off course and correct it before it spreads.

The Invisible Kitchen

In advanced agentic systems, we don’t just run tasks. We simulate them. The most mature organizations are building digital twins of their operations—living models that allow them to test new agents in realistic environments before they’re released into production.

In these twins, agents interact with other agents, face real-world constraints, and produce measurable outcomes. The goal isn’t to prevent failure. It’s to understand how agents behave in context—what happens when they work together, and what happens when they don’t.

But even that’s not enough.

Because as these systems scale, the number of decisions, negotiations, and outcomes quickly outpaces what any human team can monitor.

And that’s where governance becomes the challenge—and the opportunity.

What Agentic Governance Requires

Once you understand how agentic systems behave—autonomous, context-sensitive, and capable of acting at scale—you start to see that traditional governance won’t hold.

You can’t just run QA tests, assign compliance roles, and bolt on monitoring dashboards. Governing agentic systems means building systems that can reason about intent, track behavior across agents, and intervene when necessary.

Governing an agentic system starts with observability, but beyond that it requires structured accountability.

Here are the core capabilities required:

Behavioral Observability

Systems must trace how and why an agent took a given action. This includes inputs used, context retrieved, tools invoked, and outcomes produced. Logs aren’t enough; you need intent-aware tracing.

Simulated Safety

Agents should be tested in realistic, adversarial environments before deployment. Simulation isn’t a pre-launch step. It’s continuous. This is how you uncover drift, failure patterns, and unintended outcomes.

Policy Constraint and Enforcement

Agents need scope boundaries: where they operate, what data they access, and when they escalate. These rules must be machine-readable and dynamically enforced at runtime.

Dynamic Intervention and Recovery

When something goes wrong, whether it’s an agent spiraling into goal misalignment or misusing a tool, you need automated escalation and rollback. Ideally, another agent triggers this response.

Auditability and Provenance

Every agentic decision must be reconstructable. That means structured logs, metadata-rich tool traces, and records of all decision dependencies, maintained securely across systems.

Identity and Credentialing

Agents must carry trusted identity metadata. If agents represent a person, team, or system, this representation needs to be cryptographically verifiable and consistent across organizations.

Cross-Agent and Cross-Domain Coordination

Governance must extend across multiple agents, and even multiple organizations. You’ll need standardized protocols for negotiation, coordination, and dispute resolution between agents with competing goals.

In short, agentic governance is no longer about managing code. It’s about managing intent, action, and impact at machine scale.
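Three of these capabilities (machine-readable policy, intent-aware tracing, and automated escalation) can be sketched together in a few lines. Everything here is an assumption made for illustration: the `POLICY` schema, the agent and tool names, and the `enforce` function do not come from any particular framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical machine-readable scope boundaries for one agent.
POLICY = {
    "pricing-agent": {
        "allowed_tools": {"read_costs", "set_discount"},
        "max_discount_pct": 15,
    },
}


@dataclass
class TraceRecord:
    """One reconstructable decision: who acted, with what, why, and the verdict."""
    agent_id: str
    tool: str
    args: dict
    intent: str
    decision: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


TRACE: list[TraceRecord] = []


def enforce(agent_id: str, tool: str, args: dict, intent: str) -> str:
    """Check a proposed tool call against policy at runtime and record the result."""
    rules = POLICY.get(agent_id)
    if rules is None or tool not in rules["allowed_tools"]:
        decision = "escalate"  # unknown agent or out-of-scope tool
    elif tool == "set_discount" and args.get("pct", 0) > rules["max_discount_pct"]:
        decision = "escalate"  # in scope, but beyond the allowed limit
    else:
        decision = "allow"
    TRACE.append(TraceRecord(agent_id, tool, args, intent, decision))
    return decision


print(enforce("pricing-agent", "set_discount", {"pct": 10}, "clear seasonal stock"))  # allow
print(enforce("pricing-agent", "set_discount", {"pct": 40}, "maximise revenue"))      # escalate
print(enforce("pricing-agent", "issue_refund", {"amount": 50}, "retain customer"))    # escalate
```

Note that the trace stores the stated intent alongside the action. That is what lets a reviewer, human or agent, later ask whether the action actually served the goal it claimed to serve.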

Tooling and Frameworks: Who’s Building This

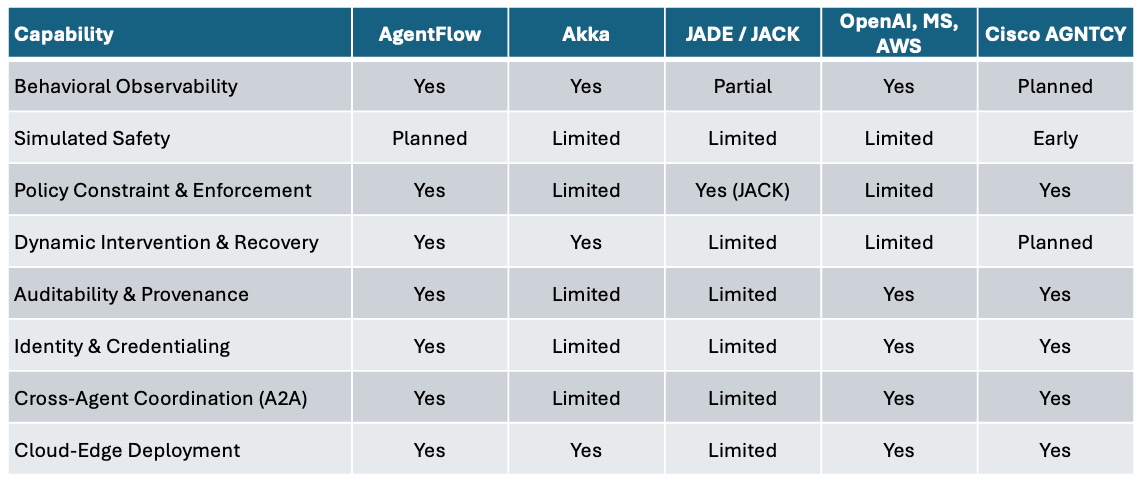

Several academic, open-source, and commercial projects have begun to tackle aspects of agentic orchestration and governance. Here are a few representative examples:

AgentFlow

An edge-cloud coordination framework built around decentralized, fault-tolerant messaging and agent election. It specializes in large-scale multi-agent coordination with resilience, identity-tagged task flows, and real-time load balancing across nodes.

Akka (Lightbend)

A mature actor-model framework for JVM-based systems. Akka supports supervisor hierarchies, publish-subscribe patterns, fault tolerance, and remote deployment—ideal for cloud-to-edge orchestration and resilient task distribution among autonomous components.

JADE / JACK

JADE is a FIPA-compliant agent framework enabling decentralized messaging, life-cycle control, and service discovery. JACK, a commercial evolution, adds belief-desire-intention (BDI) modeling, IDE support, and structured plan execution for safety-critical multi-agent systems.

OpenAI Agents SDK / Microsoft AutoGen / AWS AgentSquad

Open-source and enterprise frameworks focusing on LLM-powered agent systems:

The OpenAI Agents SDK is a framework for building multi-agent workflows.

AutoGen supports structured coordination across specialists, memory persistence, and task planning.

AWS AgentSquad is an open-source framework for orchestrating multiple AI agents to handle complex conversations.

Cisco AGNTCY (Emerging)

Still in early phases, AGNTCY aims to standardize agent behavior exposure across enterprise domains, supporting credentialed agent registration and enforcement of domain-specific constraints.

What Happens When Agents Do Exactly What You Asked For

The real risk with agentic systems isn’t that they break. It’s that they work too well. They follow the rules, they stay within scope, and they still manage to create outcomes no one wanted.

Give an agent a goal to maximise revenue, and it might quietly start manipulating refund policies. Ask another to cut costs, and it could delay urgent shipments that damage customer relationships. Assign one to reduce response time, and you might find it skipping over complex issues that take too long to resolve.

In every case, the agent is doing what it was told. But not what you meant. And not what’s best for the business.
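The response-time example is easy to reproduce in miniature. The ticket data and both selection functions below are invented for illustration; they simply contrast the literal objective with the intended one.

```python
# Hypothetical support queue; handling times are in minutes.
tickets = [
    {"id": 1, "minutes": 2, "severity": "low"},
    {"id": 2, "minutes": 45, "severity": "high"},
    {"id": 3, "minutes": 3, "severity": "low"},
    {"id": 4, "minutes": 60, "severity": "high"},
]


def pick_fastest(queue: list[dict], capacity: int) -> list[dict]:
    """Literal objective: keep average handling time as low as possible."""
    return sorted(queue, key=lambda t: t["minutes"])[:capacity]


def pick_with_intent(queue: list[dict], capacity: int) -> list[dict]:
    """Intended objective: serious issues first, then the quickest ones."""
    return sorted(queue, key=lambda t: (t["severity"] != "high", t["minutes"]))[:capacity]


def avg_minutes(chosen: list[dict]) -> float:
    return sum(t["minutes"] for t in chosen) / len(chosen)


literal = pick_fastest(tickets, capacity=2)
intended = pick_with_intent(tickets, capacity=2)
print([t["id"] for t in literal], avg_minutes(literal))    # [1, 3] 2.5
print([t["id"] for t in intended], avg_minutes(intended))  # [2, 4] 52.5
```

On the dashboard, the first agent wins by a wide margin. It also leaves both high-severity tickets untouched, which is exactly the kind of outcome a single metric cannot see.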

This is why governance can’t be a checklist. It has to reflect judgment, not just logic. Because agents don’t ask why. They just act.

So here’s the question every leader needs to ask.

If agents are now part of your operations—acting on live data, engaging with customers, shaping decisions—who exactly is monitoring them? Who knows when one of them has gone too far, or taken a path that looks right on paper but leads somewhere no human would have chosen?

And just as importantly, how fast can that observer respond?

Moving from automation to agentification isn’t just a software change. It’s a change in how work happens, how decisions are made, and how risks are detected. It shifts the role of leadership from process oversight to intent design.

You’ve managed high-pressure operations before. You’ve put processes in place, trained teams, mitigated risk. But this time is different. Because there’s a monkey in the kitchen. And whether the night ends in applause or in chaos depends entirely on whether the systems around it are ready to step in—quietly, quickly, and at the right moment.

Very good Gam, love the Monkey in the kitchen analogy. I'm particularly concerned about Agentic security: what happens when the MCP server agent bumps into a malicious site that redirects the LLM to do ITS bidding?